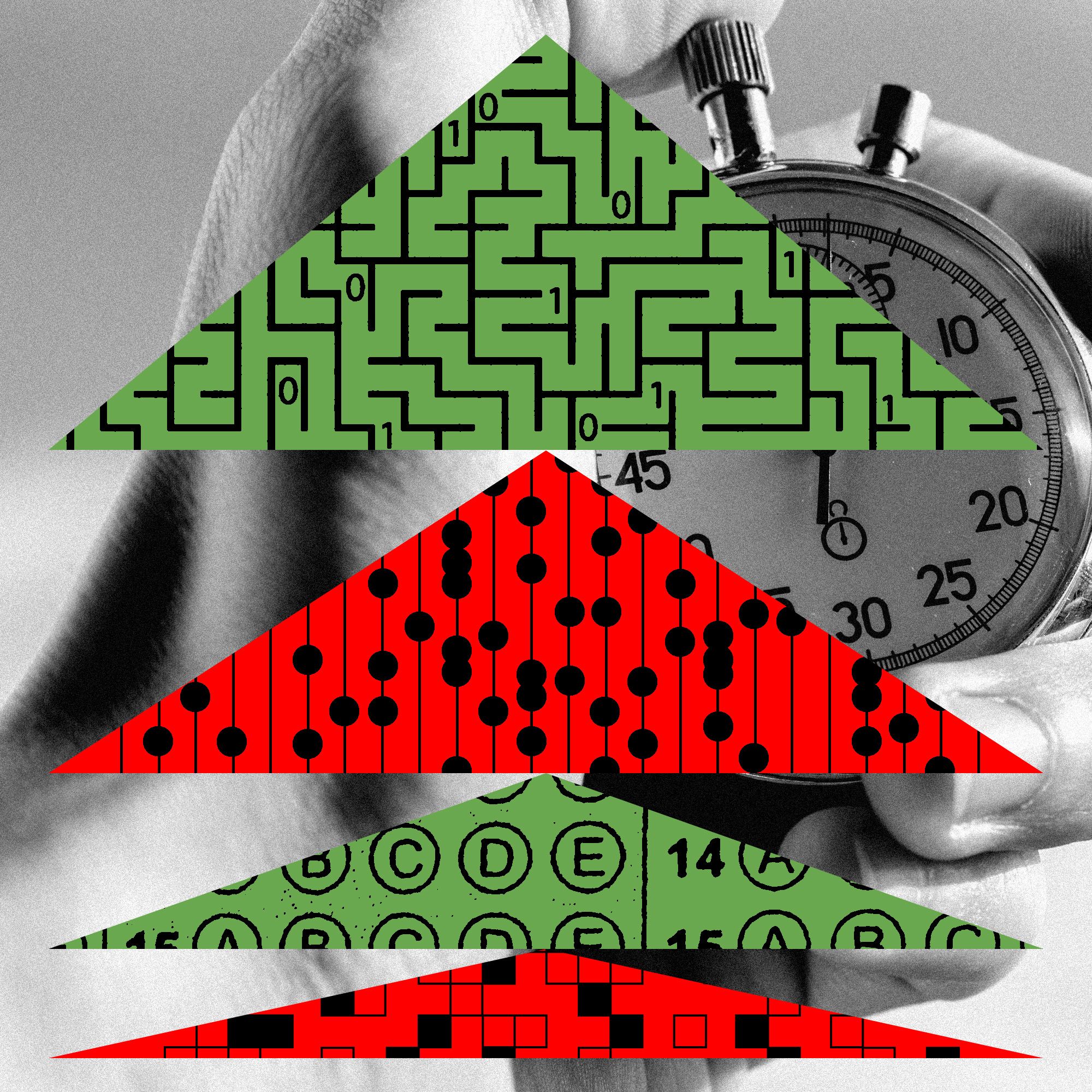

This Tool Probes Frontier AI Models for Lapses in Intelligence

Artificial intelligence (AI) has advanced rapidly in recent years, with increasingly complex models pushing the boundaries of what machines can achieve. These sophisticated models are not infallible, however: they can exhibit lapses in intelligence that may go undetected without proper scrutiny.

A new tool has been developed specifically to probe frontier AI models for such lapses. By analyzing these models' decisions and patterns, researchers can identify where a model may be making errors or displaying biases that could have serious implications.

One of the key challenges in AI ethics is ensuring that these powerful technologies are used responsibly. Tools like this one, which detect lapses in intelligence, help researchers build more reliable and trustworthy AI systems.

The consequences of AI errors can be profound, especially in fields such as healthcare, finance, and autonomous driving, where decisions made by AI systems can have life-altering effects. Detecting and addressing lapses in intelligence before they lead to harm is therefore crucial.

As research in AI ethics continues to evolve, tools like this one play an important role in ensuring that AI technology is used responsibly. Probing frontier models for lapses in intelligence is a concrete step toward a more reliable and trustworthy AI ecosystem.

As AI becomes more deeply integrated into daily life, these systems must be continuously monitored and evaluated to prevent potential harm. Tools that probe frontier models for lapses in intelligence are valuable assets in that ongoing effort.

In conclusion, tools that probe frontier AI models for lapses in intelligence represent a significant advance in AI ethics. With them, researchers can work toward AI systems that are more transparent, accountable, and aligned with human values.